Geometric 3D reconstruction augmented with Deep Learning features

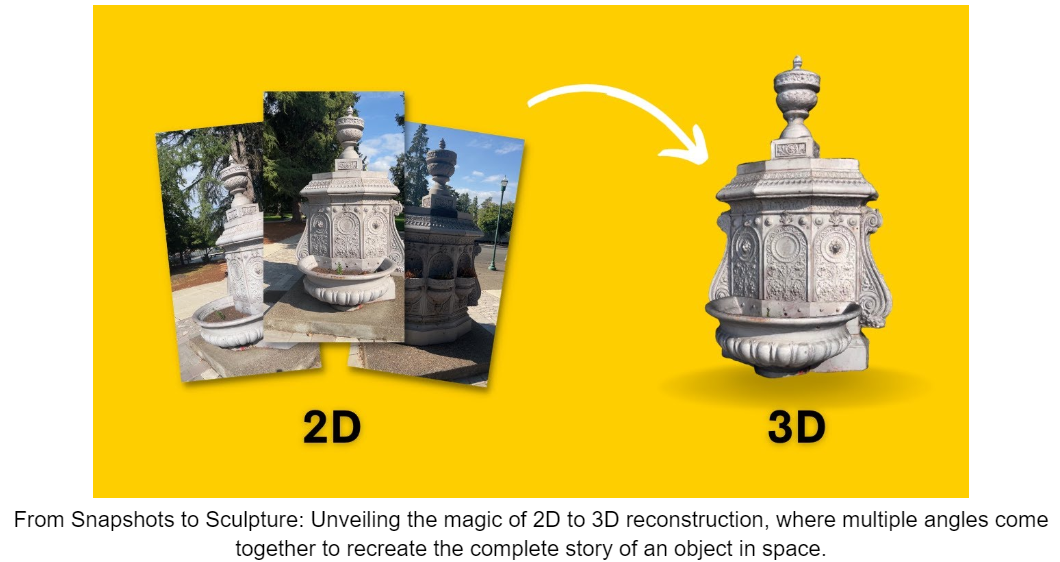

Overview of My Geometric 3D Reconstruction Project

In this project, I explored geometric 3D reconstruction: recovering camera poses and a 3D point cloud from sets of 2D images. My aim was to improve both the accuracy and the density of the reconstructed models across a variety of image sources.

Technical Approach and Methodology

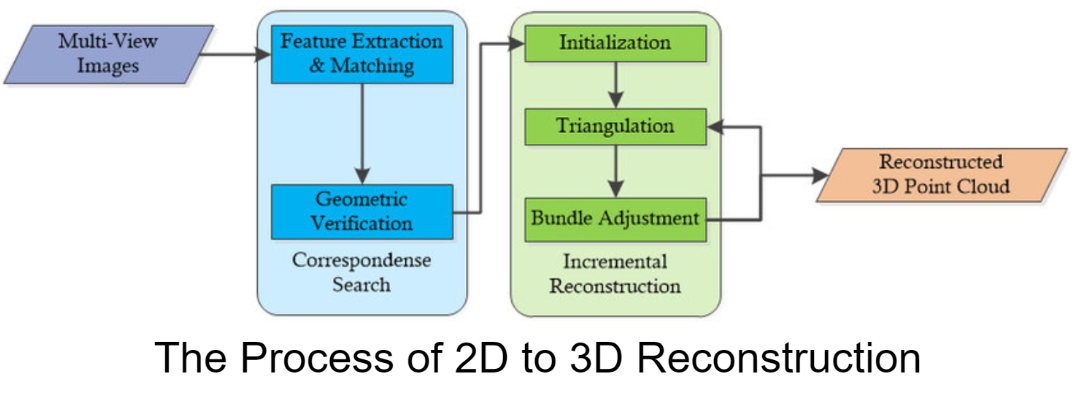

I began by calibrating the camera parameters, then implemented feature detection and matching with Scale-Invariant Feature Transform (SIFT) and Oriented FAST and Rotated BRIEF (ORB). From the matched points I estimated essential matrices to recover the camera poses, then triangulated the matches into 3D points. This pipeline reduced the reprojection error significantly.
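To make the triangulation step concrete, here is a minimal sketch of linear (DLT) triangulation for a single point seen in two views. It is an illustration, not the project's actual code: the intrinsics `K`, the 0.5-unit baseline, the test point, and the helper names `triangulate_dlt` and `project` are all assumptions chosen for the example.

```python
import numpy as np

def triangulate_dlt(P1, P2, x1, x2):
    """Triangulate one 3D point from two views with the linear (DLT) method.

    P1, P2: 3x4 projection matrices; x1, x2: pixel coordinates (u, v).
    """
    # Each view contributes two linear constraints on the homogeneous point X.
    A = np.stack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

def project(P, X):
    """Project a 3D point to pixel coordinates with projection matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Hypothetical setup: shared intrinsics, second camera shifted along x.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.5], [0.0], [0.0]])])

X_true = np.array([0.2, -0.1, 4.0])
X_est = triangulate_dlt(P1, P2, project(P1, X_true), project(P2, X_true))
```

With noise-free observations the estimate matches the true point up to floating-point error. With real matches, the linear solution is usually only a starting point that later refinement improves.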

Deep Learning Integration

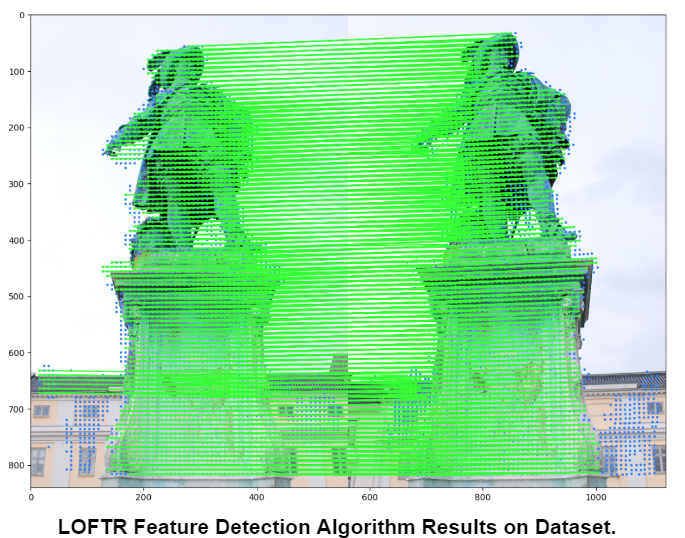

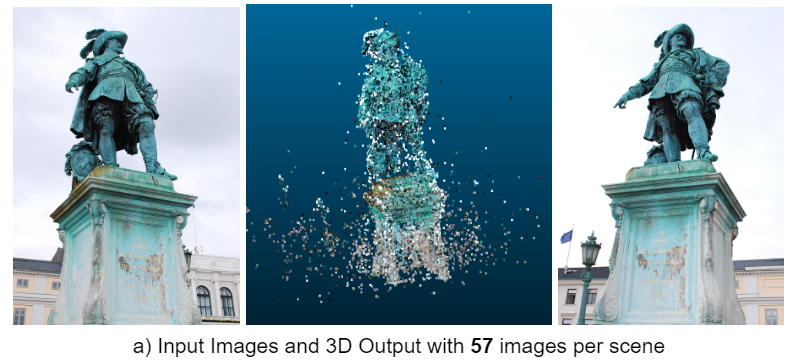

- LoFTR Features: By integrating LoFTR (Local Feature TRansformer), I obtained a substantially higher number of feature matches. LoFTR is detector-free: it combines a convolutional neural network backbone with transformer layers to match image pairs directly, and the extra matches produced denser 3D reconstructions.

- Dense Matching: Adding dense matching algorithms extended the reconstruction to more of the scene and improved its handling of occlusions.

Results and Quantitative Analysis

Bundle adjustment jointly refined the camera parameters and 3D point positions, yielding a more precise 3D point cloud and a notable reduction in reprojection error.
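The reprojection error mentioned above is the quantity bundle adjustment minimizes: the pixel distance between each observed feature and the projection of its estimated 3D point. The sketch below computes its root-mean-square value for one camera. The function name `reprojection_rmse`, the intrinsics, and the sample points are assumptions made for illustration, not values from this project.

```python
import numpy as np

def reprojection_rmse(K, R, t, points_3d, observed_px):
    """RMS reprojection error in pixels for one camera.

    K: 3x3 intrinsics, R: 3x3 rotation, t: translation (3,),
    points_3d: (N, 3) world points, observed_px: (N, 2) measured pixels.
    """
    cam = points_3d @ R.T + t          # world -> camera coordinates
    proj = cam @ K.T                   # camera -> homogeneous pixels
    px = proj[:, :2] / proj[:, 2:3]    # perspective division
    residuals = px - observed_px
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))

# Hypothetical camera at the origin looking down +z.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.zeros(3)
points_3d = np.array([[0.0, 0.0, 4.0],
                      [0.5, -0.2, 5.0],
                      [-0.3, 0.4, 6.0]])

# Exact observations give zero error.
exact_px = (points_3d @ K.T)[:, :2] / points_3d[:, 2:3]
err_exact = reprojection_rmse(K, R, t, points_3d, exact_px)

# Shifting every observation by (3, 4) pixels gives a 5-pixel error.
err_shifted = reprojection_rmse(K, R, t, points_3d, exact_px + np.array([3.0, 4.0]))
```

A full bundle adjustment would feed these per-point residuals, stacked over all cameras, to a nonlinear least-squares solver that updates poses and points together.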

Future Directions

I plan to extend this work by incorporating more sophisticated deep learning models for feature matching, aiming for higher accuracy and better adaptability to different environments.

This project demonstrates how traditional geometric algorithms and modern deep learning techniques can be combined to produce denser, more detailed 3D reconstructions.